When we talk about learning, we immediately think of neural networks or of chips that simulate the behavior of neurons. In fact, using neural networks rather than ordinary CPUs and ordinary programming techniques only changes response speed and energy consumption.

Any matrix or data array can store experiences just as neural networks do. Taken to the limit, even a Turing machine could run the same algorithms and get the same results, only very slowly.

When I was little (about 32 years) I naively thought of building computers from electronic components arranged in cells structured like neurons. According to these principles, each simulated neuron contains a memory cell that represents the level of activation (called “weight”) and, optionally, a second cell that represents the gain and determines the output level. Finally, each simulated neuron must contain analog or digital multipliers and links to a certain number of other neurons, to which it sends its output signal.
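The cell described above can be sketched in a few lines of Python. This is only one possible reading of that description, not a reconstruction of any real circuit: the class name `SimulatedNeuron`, the field names, and the rule "output = weight × gain" are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SimulatedNeuron:
    """One cell of the naive design (all names here are hypothetical)."""
    weight: float = 0.0      # memory cell: the level the text calls "weight"
    gain: float = 1.0        # optional second cell: scales the output level
    received: float = 0.0    # signal accumulated from other neurons
    targets: List["SimulatedNeuron"] = field(default_factory=list)  # links

    def output(self) -> float:
        # The multiplier: stored level times gain (assumed rule).
        return self.weight * self.gain

    def fire(self) -> None:
        # Send the output signal along every outgoing link.
        out = self.output()
        for target in self.targets:
            target.received += out


# Usage: neuron a drives neuron b through a single link.
a = SimulatedNeuron(weight=0.5, gain=2.0)
b = SimulatedNeuron()
a.targets.append(b)
a.fire()
print(b.received)
```

Even in this toy form, every neuron carries its own memory cells, a multiplier and a list of links, which is the per-cell overhead the following paragraphs argue against.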

Today some research groups are producing neuromorphic chips based on these same naive principles, and of course these systems work; even a Turing machine would work. But building qdroids requires considerably greater efficiency: a quantum leap, a different way of using the available electronic resources.

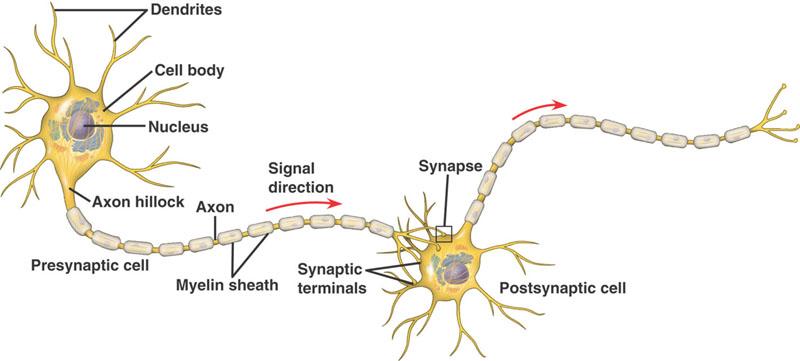

Using electrons and silicon to simulate the operation

of beings based on carbon and chemical neurotransmitters

is not the most efficient solution.

The evolution of carbon-based living beings had molecules, chemical messages and DNA at its disposal, and with these building blocks the best solution it found was the neuron. But today's silicon-based components are billions of times faster than chemical signals, so we need to take these possibilities into account and use them efficiently.

In the future, techniques will be used that we cannot even imagine today. For now we have silicon components, and the most efficient way to use them is not to force them to simulate what they are not. We just need to add non-determinism driven by chance and structure the components appropriately.

For now, "adding non-determinism" is just a nice phrase and we have no idea how it will be done, but if it is possible we will succeed. We already have some experience with non-deterministic programming.

We trust in the help of Professor Anselmi for a feasibility analysis. What we need is his insight into these matters. It is a question of making an approximate statistical calculation that tells us whether it is worthwhile to experiment in this direction, or whether the number of random combinations to try, and therefore the time required to obtain some results, is so large that any attempt based on classical programming is inadvisable from the outset.
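To give an idea of the kind of approximate calculation meant here, the following Python sketch estimates how long a blind random search would take. It is not the requested feasibility analysis: it assumes, purely for illustration, that the goal is one specific configuration of `bits` independent binary choices, that every trial is an independent uniform guess, and that `trials_per_second` is a hypothetical machine speed. The real search space of a qdroid is not known.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def combinations(bits: int) -> int:
    """Number of distinct configurations of `bits` binary choices."""
    return 2 ** bits


def expected_years(bits: int, trials_per_second: float) -> float:
    """Expected time, in years, to hit one specific configuration.

    With N equally likely configurations and independent uniform guesses,
    the expected number of trials is N (geometric distribution, p = 1/N).
    """
    return combinations(bits) / trials_per_second / SECONDS_PER_YEAR


# Usage: the trial rate of 1e12 per second is an assumed figure.
for bits in (32, 64, 128):
    print(bits, "bits:", expected_years(bits, 1e12), "years")
```

Under these assumptions the time doubles with every added bit, so the answer depends almost entirely on how large the space of combinations really is, and much less on the speed of the hardware.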

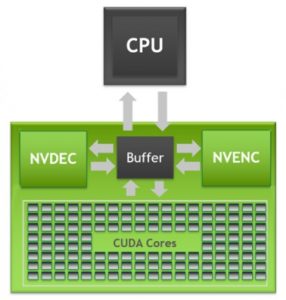

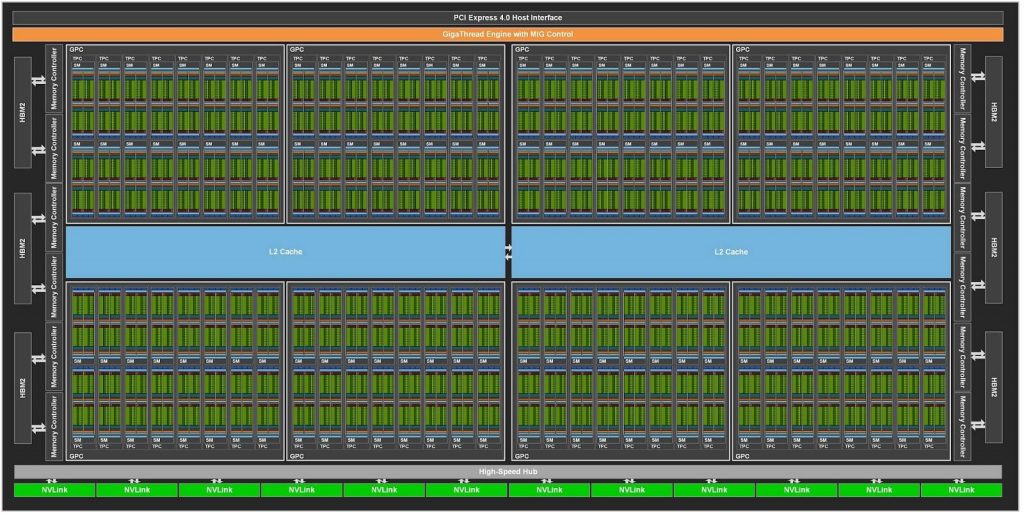

Matrix calculation with video cards

Today's computer CPUs act sequentially and are too slow to store and process large amounts of data in parallel. Fortunately, there are video cards that can operate on huge matrices and perform the same operation on a large number of elements simultaneously and at very high speed. See the CUDA Toolkit documentation from NVIDIA.

Some of these cards have 10752 processors (CUDA cores), and each core contains two processors that can also perform 32-bit floating-point operations.
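A rough way to see what this parallelism buys is to count how many passes are needed to apply one operation to every element of a matrix. The Python sketch below uses a deliberately idealized model: it assumes that each processor handles one element per pass and it ignores memory bandwidth, scheduling and every other real limit. The function name `passes_needed` and the 4096 × 4096 matrix size are hypothetical choices for the example.

```python
def passes_needed(elements: int, lanes: int) -> int:
    """Passes required when `lanes` processors each handle one element per pass."""
    # Integer ceiling division: a partially filled last pass still counts.
    return (elements + lanes - 1) // lanes


# Usage: one operation over a 4096 x 4096 matrix (assumed size).
elements = 4096 * 4096
sequential = passes_needed(elements, 1)      # one element at a time
parallel = passes_needed(elements, 10752)    # card with 10752 processors
print(sequential, parallel)
```

In this model the sequential case needs one pass per element, while the card needs only a few thousand passes for the same matrix.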

With current technologies this is probably the only possibility we have of obtaining some useful results starting from quantum randomness.

The idea of using the parallel computing capabilities of video cards for neural computing is already widely applied; see, for example, this page. But current implementations naively try to simulate the functioning of neurons.

Current implementations are inefficient and too slow for qdroids,

as already explained in the previous chapter of this page.